Safe Harbor Statement (AKA how you know it's worth a read).

In this post, I'll make statements related to our business that may be considered forward-looking within the meaning of Section 27A of the Securities Act of 1933, as amended, and Section 21E of the Securities Exchange Act of 1934, as amended. All statements other than statements of historical fact are forward-looking statements or the opinions and observations of Jon McLaren.

Generative AI (artificial intelligence) is constantly being talked about right now. As a developer, I find it reminds me of both my childhood and something far older than me. Ever heard of the Mechanical Turk? I'm not referring to Amazon's service of that name; I'm referring to what it was named after.

The Mechanical Turk, also known as the "Automaton Chess Player", was a chess-playing machine constructed in 1770. It resembled a man seated at a chessboard, which sat on top of a large cabinet. The man's mechanical arm was capable of moving the pieces.

For 84 years it was exhibited on tours by various owners as an automaton. It played a strong game of chess against human opponents, often against some of the best players around. The table holding the chessboard was a cabinet that supposedly contained all of the mechanical workings that let the Mechanical Turk play chess. Open one side and you'd see gears, cogs, clockwork, wiring, and brass parts; open the other side and there was mostly empty space, so with the cabinet open you could see clear through the table. An impressive mechanical feat.

Except the most crucial part of the machine wasn't something the audience ever saw.

I grew up enjoying magic tricks. I very quickly got past that childlike stage of believing they're real; I just really enjoyed guessing and learning how the tricks were done. I watched David Blaine, David Copperfield, Criss Angel, the Masked Magician, and more. Being willfully tricked into seeing, hearing, or feeling something that didn't happen as if it did, and being curious about how, is amazing, and that enjoyment has carried on into my adulthood. The magicians who stayed the most interesting to me from childhood to adulthood were Penn and Teller.

Penn and Teller have always done things a little differently. Even people who don't care for magic tricks often know that Teller never talks on stage. He can and does talk, and is actually the brains behind the duo; his silence is both a misdirection and a way for him to avoid public speaking. When I said they do things differently, though, I was actually referring to the tricks themselves. Penn and Teller became popular for showing the audience how their tricks were done while they were doing them: gravity-defying tricks on TV where the live studio audience was in on the trick; the oldest known magic trick, the cups and balls, performed with clear plastic cups and shiny tinfoil balls; and tricks that look like something completely mundane, like lighting a cigarette, smoking it, and putting it out. They don't just show the trick; they tell and show you how they're doing it. You're still left amazed, and often laughing, despite knowing exactly what's happening.

The Turk's secret is honestly not too different from a Penn and Teller trick performed on The Late Late Show with James Corden. The trick is called "Lift off for love," named after the show tune played while it's performed. Teller gets into a box with a drawing of an astronaut on it. Penn removes the head, then the torso, places the legs upside down, and moves the head to the opposite side of the stage. The whole time, Teller keeps showing that he's still inside each of the boxes by waving handkerchiefs, waving his legs, and revealing his face in time with the music: "Blast off!" Penn re-stacks the boxes in the correct orientation and Teller steps out. Then Penn and Teller replace all of their props with clear versions, and the stage changes to reveal that, although it looked like you could see under it, you couldn't. They repeat the trick to a similar, slower song, replacing "Blast off!" with "Trap door!", as Teller hilariously slides around under the stage, poking his hands, head, and legs into the box props through trap doors.

The Mechanical Turk as you probably guessed was actually an illusion.

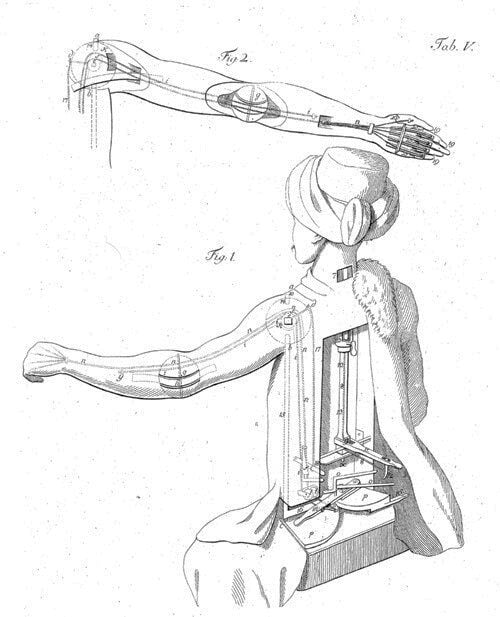

Sketch on copper of what the Mechanical Turk and its cabinet looked like. Public Domain, source

The inside of the Mechanical Turk's cabinet was all misdirection. A human chess master hid inside, sliding on a seat from one side of the cabinet to the other depending on which doors were being opened to show the audience. The sliding seat moved dummy machinery into place, concealing the person and misdirecting the audience further. The clockwork on one side took up only a shallow part of the cabinet, leaving room for the person to hide behind it. During a game, the hidden player could tell where the pieces were because the pieces were magnetic: magnets on strings under the board moved to indicate which pieces had moved and to where. Inside the cabinet was a pegboard chessboard connected to a pantograph-style series of levers that controlled the left mechanical arm of the "automaton". The hidden player could move the Turk's arm up and down and make its hand grasp the pieces. All of this was lit by candlelight, with the smoke vented out through the dummy figure. The hidden player could also control some of the dummy's facial expressions and produce sounds to add to the illusion.

Just because the Mechanical Turk was fake doesn't mean it was useless. Magic tricks aren't useless: they entertain, invite curiosity, and give you an escape from the things that stress you out. In that moment, you want to know how it was done, and you can appreciate the weirdly specific skills someone has mastered. Those same skills are used across all manner of professions, from filmmaking and marketing to covert operations. That's right, James Bond is a magician who likes his martini shaken, not stirred.

As someone with an engineering mind who grew up enjoying magic tricks, I'm as fascinated by how a trick is actually done as I am by the bewildering performance itself. To be honest, my entire career has revolved around magic in a way. As programmers, our job is to create the illusion that inanimate objects like sand, plastic, and metal can think. The first computers were mechanical; modern computers are largely electrical translations of those original mechanical ideas, continuously miniaturized and built upon. We're so damned good at it that the illusion is genuinely useful.

I hated homework growing up. One of the first useful programs I created did my math and chemistry homework for me: Formula Conquerer. It created the illusion that I was doing my homework. It not only did the calculations and gave me the answers, but also showed how it got to them. Math and chemistry homework went from taking hours to taking a few minutes of copying down what my program told me. Its interface was kind of like a chat, or, as my now more experienced developer mind would put it, a rudimentary CLI (command line interface). Unlike an LLM (large language model), it could only handle the inputs it was programmed for. I showed it to my programming teacher, who was also at one point my math teacher. He was genuinely impressed and told me he had no problem with me using it for homework, because to build it I had to understand the math first. He just told me I wasn't allowed to distribute it or use it on tests. Little did he know that the Python code had already been ported to the good ol' fashioned TI-83 graphing calculator, and despite my trying to hold up my end of that agreement, it might have made it onto some other calculators whose owners may not have been as honest about it as I was.
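To make the contrast with an LLM concrete, here's a minimal sketch of the kind of rudimentary CLI described above. It is not the original Formula Conquerer code, which isn't shown here; the `quadratic` command and the `solve_quadratic`, `handle`, and `repl` functions are hypothetical names chosen for illustration (Python 3.9+ assumed). It shows its work for exactly the inputs it was programmed for and rejects everything else.

```python
import math


def solve_quadratic(a: float, b: float, c: float) -> list[str]:
    """Solve ax^2 + bx + c = 0, returning each step as a line of text ("show your work")."""
    if a == 0:
        return ["Not a quadratic: a must be non-zero."]
    steps = [f"Equation: {a}x^2 + {b}x + {c} = 0"]
    disc = b * b - 4 * a * c
    steps.append(f"Discriminant: b^2 - 4ac = {disc}")
    if disc < 0:
        steps.append("Discriminant is negative, so there are no real roots.")
        return steps
    root = math.sqrt(disc)
    x1 = (-b + root) / (2 * a)
    x2 = (-b - root) / (2 * a)
    steps.append(f"x = (-b ± sqrt(disc)) / 2a, so x = {x1} or x = {x2}")
    return steps


# The only commands this interface understands: name -> (handler, number of arguments).
COMMANDS = {"quadratic": (solve_quadratic, 3)}


def handle(line: str) -> list[str]:
    """Dispatch one line of input; anything not in COMMANDS is rejected."""
    parts = line.split()
    if not parts or parts[0] not in COMMANDS:
        return ["Unknown command. Try: quadratic a b c"]
    handler, arity = COMMANDS[parts[0]]
    if len(parts) - 1 != arity:
        return [f"{parts[0]} expects {arity} numbers"]
    try:
        numbers = [float(p) for p in parts[1:]]
    except ValueError:
        return ["Arguments must be numbers"]
    return handler(*numbers)


def repl() -> None:
    """Read commands until end of input (Ctrl+D), printing each step."""
    while True:
        try:
            line = input("> ")
        except EOFError:
            break
        for out in handle(line):
            print(out)
```

`handle("quadratic 1 -3 2")` returns the steps ending in `x = 2.0 or x = 1.0`, while `handle("what is 2+2")` only gets the "Unknown command" reply. Calling `repl()` turns it into an interactive prompt.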

An LLM, like a CLI or a GUI (graphical user interface), is probably best described as an interface: a way of telling the computer what you want and getting a response back, in this case via text rather than through imagery or some other means.

Often when you're interacting with an LLM, a more traditional program is working on the task behind the scenes and passing its output back to the LLM, which returns it to you in a way that feels conversational. Kinda like the chess player hiding in the Mechanical Turk's cabinet. One example is Perplexity.AI, which in my opinion is a fantastic search and research tool, and I appreciate how transparent the company is about what it's doing. The LLM provides the interface through which the user interacts and receives information. Behind the scenes, though, the LLM takes your question and converts it into search keywords. Those keywords are fed into search queries, likely through an API (application programming interface). The company may run another LLM over the pages found in the first few results to try to validate and summarize the information. That then gets passed to an LLM to respond to you. Finally, they show you the information that came up in the search results and try to identify which words in the response each link should be associated with.
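Here's a minimal sketch of that kind of workflow, assuming Python 3.9+. It is not Perplexity's implementation, which isn't public; `llm_extract_keywords`, `search_api`, and `llm_respond` are hypothetical stand-ins (plain string handling over a canned `FAKE_INDEX`) for what would really be LLM and search API calls. What it shows is the shape: a conversational layer sitting on top of a traditional search step, like the chess master hiding in the cabinet.

```python
from dataclasses import dataclass


@dataclass
class SearchResult:
    url: str
    snippet: str


STOPWORDS = {"what", "was", "is", "the", "a", "an", "of", "how", "who", "does"}

# Hypothetical canned "web" so the sketch runs offline.
FAKE_INDEX = [
    SearchResult("https://example.com/turk", "The Mechanical Turk was a chess-playing automaton built in 1770."),
    SearchResult("https://example.com/clarke", "Clarke's third law compares advanced technology to magic."),
]


def llm_extract_keywords(question: str) -> list[str]:
    """Stand-in for an LLM call that turns a question into search keywords."""
    words = [w.strip("?.,!").lower() for w in question.split()]
    return [w for w in words if w and w not in STOPWORDS]


def search_api(keywords: list[str]) -> list[SearchResult]:
    """Stand-in for a search API: return results whose snippet mentions any keyword."""
    return [r for r in FAKE_INDEX if any(k in r.snippet.lower() for k in keywords)]


def llm_respond(results: list[SearchResult]) -> str:
    """Stand-in for the final LLM call that writes a conversational answer with citations."""
    if not results:
        return "I couldn't find anything on that."
    body = " ".join(f"{r.snippet} [{i}]" for i, r in enumerate(results, start=1))
    sources = "\n".join(f"[{i}] {r.url}" for i, r in enumerate(results, start=1))
    return f"{body}\n\nSources:\n{sources}"


def answer(question: str) -> str:
    keywords = llm_extract_keywords(question)  # 1. question -> keywords (LLM)
    results = search_api(keywords)             # 2. keywords -> results (traditional API)
    return llm_respond(results)                # 3. results -> conversational reply (LLM)
```

`answer("What was the Mechanical Turk?")` returns the Turk snippet tagged `[1]` followed by a sources list containing `https://example.com/turk`. The optional validate-and-summarize LLM pass would slot in between steps 2 and 3.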

Unlike most other interfaces, LLMs are capable of doing some things themselves rather than just taking your input, passing it to another program, and returning the output. LLMs are text prediction engines, like your phone keyboard's next-word prediction, but powered by far more data and often by server farms in the background. They predict one token at a time until they produce the full result you wanted. A token is a short run of text characters (about 4 on average for English text), so much of the time it's not a full word but part of a word, a number, a fragment of code, and so on. They have a bit of randomness built in, which keeps them from always returning the single most likely continuation (and from simply parroting back the data they were trained on) and ultimately lets them write more natural, human-like text. That randomness is also one reason they hallucinate. They're amazing at summarizing, rewording, and finding things in large swaths of text, and this works great for text that isn't human language, too, like code and CSVs.
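That "bit of randomness" is usually controlled by a setting called temperature. Here's a minimal sketch of temperature sampling for a single next-token choice, assuming made-up scores (`LOGITS`) for three candidate tokens; real models score every token in a vocabulary of tens of thousands, and providers may combine temperature with other sampling settings.

```python
import math
import random


def sample_next_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Choose the next token from raw model scores ("logits") using temperature sampling."""
    if temperature <= 0:
        # Temperature 0 is usually treated as greedy decoding: always take the top-scoring token.
        return max(logits, key=logits.__getitem__)
    # Softmax over temperature-scaled scores; subtracting the max keeps exp() from overflowing.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())
    weights = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(weights.values())
    tokens = list(weights)
    probs = [weights[t] / total for t in tokens]
    # Lower temperature sharpens the distribution; higher temperature flattens it (more randomness).
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical scores for the word after "The cat sat on the".
LOGITS = {" mat": 3.0, " floor": 2.0, " moon": 0.5}
```

At temperature 0 it always picks `" mat"`; at 1.0, `" mat"` wins roughly 69% of the time, with `" floor"` and `" moon"` occasionally slipping in. That occasional less-likely pick is what makes output varied, and sometimes wrong.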

Tools like Perplexity, HubSpot's Breeze, Content Remix, and more are early AI agents and agentic systems: tools composed of LLMs used in sequence with traditional programs or APIs. The definition of "agent", like the term AI itself, is a little vague. Not all agents start and end with a chat interface, but many will. The common thread behind the scenes is that they all use a generative AI or machine learning tool somewhere in their work. Perplexity and Content Remix are what are known as directed acyclic graph (DAG), or workflow-based, agentic systems. They have a prescribed flow and may make some decisions while executing a task, but the sequence is largely predetermined by their creator.
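A DAG-based workflow can be sketched with Python's standard-library `graphlib` (3.9+). The step names and lambdas in `EXAMPLE_STEPS` are hypothetical placeholders for what would be LLM or API calls in a tool like Content Remix; this is not how any HubSpot product is actually implemented. The creator fixes the graph up front, and the runner only works out a valid execution order.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Each step receives the results produced so far and returns its own result.
Step = Callable[[dict], object]


def run_workflow(steps: dict[str, Step], depends_on: dict[str, set[str]]) -> dict:
    """Run every step once, in an order that respects the dependency graph (a DAG)."""
    graph = {name: depends_on.get(name, set()) for name in steps}
    results: dict = {}
    # static_order() yields each node after all of its predecessors; a cycle raises graphlib.CycleError.
    for name in TopologicalSorter(graph).static_order():
        results[name] = steps[name](results)
    return results


# A hypothetical "remix"-style flow: one draft fans out into two formats, then both get published.
EXAMPLE_STEPS: dict[str, Step] = {
    "draft": lambda r: "Pick a card, any card.",
    "blog_post": lambda r: r["draft"] + " (long form)",
    "social_post": lambda r: r["draft"][:10] + "...",
    "publish": lambda r: [r["blog_post"], r["social_post"]],
}
EXAMPLE_DEPS = {
    "blog_post": {"draft"},
    "social_post": {"draft"},
    "publish": {"blog_post", "social_post"},
}
```

Running `run_workflow(EXAMPLE_STEPS, EXAMPLE_DEPS)` executes `draft` first and `publish` last, and `publish` ends up as `["Pick a card, any card. (long form)", "Pick a car..."]`. If a step is accidentally wired back into an earlier one, `static_order()` raises `graphlib.CycleError`, which is the "acyclic" part being enforced.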

Where things get complicated is that LLM APIs are also becoming agentic themselves, using tools behind the scenes to do things a local open source model can't do, or can't do as well, on its own: for example, having access to search (like Google Gemini Pro), a knowledge base, or calculators to help them respond more accurately. LLMs are known for being bad at math, bad at counting the letters in words, and so on. The reason is that they don't see the words or the math equation; they operate on tokens, and math and letter counting require working with fragments of a token, something they don't do.
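To see why letter counting trips models up, here's a toy tokenizer. The vocabulary and token IDs are invented for illustration, and greedy longest-match is a simplification of how real tokenizers, such as byte-pair encoding, split text.

```python
# Hypothetical toy vocabulary. Real tokenizers learn tens of thousands of pieces from data;
# greedy longest-match is a simplification used here for illustration.
VOCAB = {
    "straw": 1001, "berry": 1002,
    "s": 1, "t": 2, "r": 3, "a": 4, "w": 5, "b": 6, "e": 7, "y": 8,
}


def tokenize(text: str) -> list[int]:
    """Split text into token IDs by repeatedly taking the longest matching vocabulary piece."""
    ids: list[int] = []
    i = 0
    while i < len(text):
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                i = end
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return ids
```

`tokenize("strawberry")` gives `[1001, 1002]`: two numbers with no "r" visible in either of them. That's all the model receives, which is why "how many r's are in strawberry?" is hard for it, whereas ordinary code like `"strawberry".count("r")` gets 3 trivially.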

There are a couple of ways to solve this problem (that I know of). One is to train the LLMs on a ton of math problems until they start predicting the answers properly. The other is to give them a virtual calculator when you're confident they've been given a math problem. But the number of possible math problems is infinite, so training alone can't cover every case. We know that many AI tools have been trained on mathematical proofs, so my speculation is that the big AI companies are using a hybrid of the two, as that might produce the best results.
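The second approach, handing the model a calculator, can be sketched as a router that sends arithmetic to a tool and everything else to the LLM. Everything here is an assumption for illustration: `fake_llm` stands in for a real model call, and the regex check is far cruder than how production systems decide to use a tool (in many LLM APIs, the model itself requests the tool call). The calculator walks Python's `ast` instead of calling `eval()`, so it only accepts numbers and basic operators.

```python
import ast
import operator
import re

# Operators the calculator tool allows; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,  # no limit on huge exponents here; a real tool would need one
    ast.USub: operator.neg,
}


def calculator(expression: str) -> float:
    """A 'virtual calculator' tool: evaluates arithmetic without using eval()."""
    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expression, mode="eval").body)


# Deliberately naive check: after stripping "what is", only digits, operators, and spaces remain.
_MATH_ONLY = re.compile(r"[\d\s.+\-*/()]+")


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a call to an LLM."""
    return f"(LLM reply to: {prompt})"


def respond(prompt: str) -> str:
    candidate = re.sub(r"^\s*what is\s+", "", prompt, flags=re.IGNORECASE).strip().rstrip("?=").strip()
    if candidate and _MATH_ONLY.fullmatch(candidate):
        try:
            return f"{candidate} = {calculator(candidate)}"  # route to the calculator tool
        except (SyntaxError, ValueError, ZeroDivisionError):
            pass  # looked like math but wasn't valid; fall through to the LLM
    return fake_llm(prompt)
```

`respond("What is 1234 * 5678?")` returns `"1234 * 5678 = 7006652"` exactly, something a pure next-token predictor can get wrong, while `respond("Tell me a joke")` falls through to the stand-in LLM.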

The more sophisticated those APIs get, the more powerful the agents built on them become. We'll continue to see the lines blurred, because companies aren't going to reveal the secrets of how they get their tools to do what they do, hiding much of it behind an LLM interface. The LLM you're chatting with might be using a whole network of tools behind the scenes, and even interacting with other agents to get tasks done. Multimodal AI often (but not always) combines several different machine learning tools: for example, one identifies what's in an image and passes that to the LLM, which then answers your questions about the image as if it were the one that saw it. Speaking to an AI can work the same way: a separate speech-to-text model transcribes your audio, the LLM takes that text as input and responds, and its output goes to a text-to-speech model that creates the audio you hear back.
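Here's a minimal sketch of that voice pipeline, assuming each model is just a function. `fake_stt`, `fake_llm`, and `fake_tts` are hypothetical stand-ins (decoding bytes, echoing, and uppercasing) so it runs without real models; the point is that the LLM in the middle only ever sees and produces text.

```python
from typing import Callable

# Each stage is just a function; any real model with the same shape could be swapped in.
SpeechToText = Callable[[bytes], str]
Chat = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]


def voice_assistant(audio: bytes, stt: SpeechToText, llm: Chat, tts: TextToSpeech) -> bytes:
    """Audio in, audio out: three separate models chained behind one 'voice'."""
    transcript = stt(audio)  # separate speech-to-text model
    reply = llm(transcript)  # the LLM only ever sees text
    return tts(reply)        # separate text-to-speech model


# Hypothetical stand-ins so the sketch runs without any real models.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the bytes are already a transcript


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.upper().encode("utf-8")  # pretend uppercase bytes are synthesized audio
```

`voice_assistant(b"hello", fake_stt, fake_llm, fake_tts)` returns `b"YOU SAID: HELLO"`. Swapping any stage for a real model changes nothing about the other two, which is part of why these seams are so easy to hide behind a single chat window.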

A newer approach to multimodal AI involves processing the image or audio directly into a token format that the LLM can work with. The thought is that this could unlock deeper capabilities.
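One well-known way to turn an image into tokens is the patching approach popularized by Vision Transformers (ViT): cut the image into fixed-size squares and flatten each square into a vector. This sketch assumes a tiny grayscale image stored as nested lists, and `to_patches` is a hypothetical helper; in a real model each flattened patch would then pass through a learned projection into the same embedding space as text tokens, which isn't shown. It isn't a claim about how any particular product works.

```python
def to_patches(image: list[list[int]], patch: int) -> list[list[int]]:
    """Split an H x W grayscale image into non-overlapping patch x patch tiles,
    flattening each tile (row by row) into one vector, i.e. one image "token"."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, h, patch):  # tiles are emitted left to right, top to bottom
        for left in range(0, w, patch):
            tokens.append([image[r][c] for r in range(top, top + patch) for c in range(left, left + patch)])
    return tokens
```

A 4 x 4 image with 2 x 2 patches becomes a sequence of 4 "tokens" of 4 numbers each, which a transformer can then process alongside text tokens.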

Previously, agents like Perplexity required developers to build them. Agents are now entering a new phase where people without coding skills can create them by putting them together like steps in a HubSpot workflow.

There's another form of agent considered non-DAG (think: not workflow-based). These agents create their own plans to solve a problem. They have access to multiple tools or skills and can choose when and how to use them. They're almost generalists, while workflow-based agents are more like specialists. Because agents and agentic systems can talk to each other, these generalist agents can produce a lot of value by calling workflow-based agentic systems behind the scenes.
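Unlike a workflow with fixed steps, a non-DAG agent runs a loop: at each step, something decides which tool to use next based on the goal and what has happened so far. A minimal sketch, assuming Python 3.9+; `TOOLS` and `toy_planner` are hypothetical, and the planner is a hard-coded rule standing in for the LLM a real agent would ask.

```python
import re
from typing import Callable

Tool = Callable[[str], str]
Planner = Callable[[str, list[str]], tuple[str, str]]


def run_agent(goal: str, tools: dict[str, Tool], planner: Planner, max_steps: int = 5) -> list[str]:
    """Let a planner pick the next tool at every step instead of following a fixed flow."""
    history: list[str] = []
    for _ in range(max_steps):  # a step limit keeps a confused planner from looping forever
        tool_name, tool_input = planner(goal, history)
        if tool_name == "finish":
            history.append(f"finish: {tool_input}")
            break
        result = tools[tool_name](tool_input)
        history.append(f"{tool_name}({tool_input}) -> {result}")
    return history


# Hypothetical tools and planner so the sketch runs. A real agent would ask an LLM to choose each step.
TOOLS: dict[str, Tool] = {
    "search": lambda query: "Built in 1770; exhibited until 1854.",
    "subtract": lambda pair: str(int(pair.split()[1]) - int(pair.split()[0])),
}


def toy_planner(goal: str, history: list[str]) -> tuple[str, str]:
    if not history:
        return ("search", goal)
    if len(history) == 1:
        found = history[0].rsplit("-> ", 1)[-1]          # the search result text
        return ("subtract", " ".join(re.findall(r"\d{4}", found)))
    return ("finish", history[-1].rsplit("-> ", 1)[-1])  # report the last tool's result
```

`run_agent("How long was the Turk exhibited?", TOOLS, toy_planner)` searches, subtracts the two years it found, and ends with `"finish: 84"`. The `max_steps` cap matters more here than in a fixed workflow, since nothing guarantees the planner ever chooses to finish.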

There's a lot of opportunity here for developers to build not just more sophisticated AI agents than non-developers can, but, importantly, the building blocks behind the scenes that make these tools powerful. You can get an early picture of this for yourself by trying Agent.AI, an agent platform that primarily supports creating DAG-based agents and lets you use serverless functions and other programmatic techniques to process and handle information and requests.

Developers, if you haven't started already, now is the time to start learning and playing with AI tools. Not tomorrow, not next week. Use them in your daily life right now. Try open source local models, try the APIs, try tools like ChatGPT, Cursor, and the GitHub Copilot family. The more you use them, the better you'll be able to separate what the LLM itself is doing from what else is going on. You'll start to see patterns in what they're good at and what they struggle with. You'll start thinking of your own ways to create agents, solve for gaps that others may not see, and spot the tricks behind the magic. The longer you wait, the more the advancements will look like magic, and the harder it will be to learn and decipher what's really happening behind the scenes.

"Any sufficiently advanced technology is indistinguishable from magic." - Arthur C. Clarke

Be the magician who knows how to perform that magic. To be that magician, you must first learn the terms and concepts around how AI works, which may sound as foreign and odd to the average person as incantations and spells. Then apply the knowledge you've already acquired in your career as a developer to your newfound generative AI knowledge.

I think it's a safe prediction that over the next year, you'll be hearing from HubSpot and the HubSpot developer relations team about AI. Personally, I want to demystify AI tools, teach you to be that magician, and help you secure your future, and, selfishly, I wanna see the cool things y'all are gonna build.

The only way to express the excitement we as developers should have is to quote a silly recruiter who worked with me when I came to HubSpot, who in turn was quoting a favorite show of mine, Doctor Who:

Allons-y! (French for, "Let's go!")